Highlights

OpenAI has announced GPT-4, the latest version of the language model behind its hugely popular artificial intelligence chatbot, ChatGPT.

- GPT-4 is a more advanced, next-generation language model that can perform more complex language tasks than GPT-3 and ChatGPT, which already understand and generate human-like language.

- GPT-4 will initially be available to ChatGPT Plus subscribers, who pay $20 per month for premium access to the service.

- In this article, we share all the details about GPT-4, including the difference between ChatGPT and GPT-4, ChatGPT Plus subscriptions, Visual ChatGPT and more.

Microsoft-backed OpenAI, the American artificial intelligence research laboratory, recently announced the new version of its language model, called GPT-4.

This model has been claimed to be even more advanced than the current ChatGPT and will be able to perform even more complex language tasks.

As per OpenAI’s announcement, it will have a larger capacity to learn and process information, and will likely have more advanced features such as a better understanding of context and improved natural language generation capabilities.

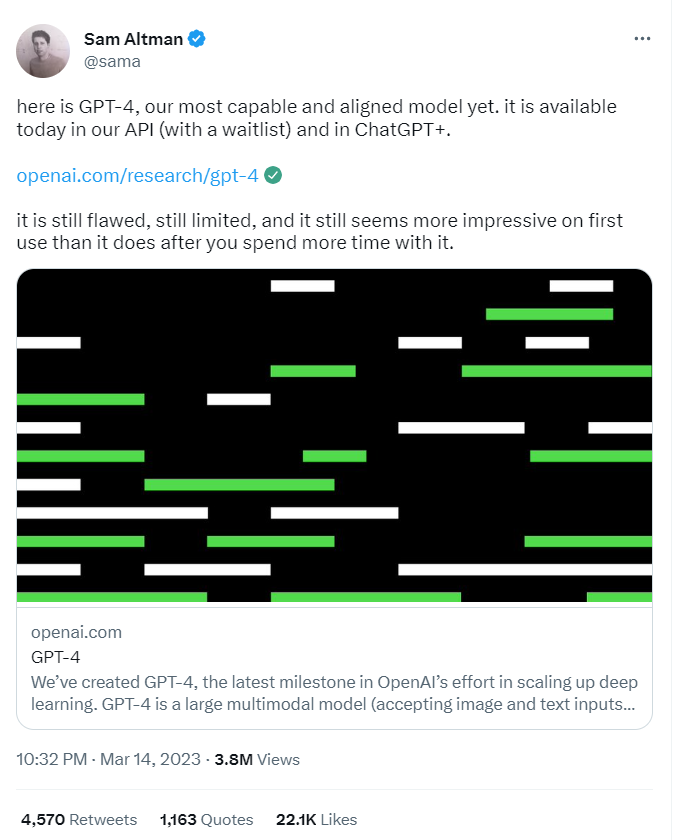

OpenAI CEO Sam Altman shared the official GPT-4 announcement blog post on Twitter, writing: “Here is GPT-4, our most capable and aligned model yet. It is available today in our API (with a waitlist) and in ChatGPT+. It is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.”

He continued the thread, adding: “It is more creative than previous models, it hallucinates significantly less, and it is less biased. it can pass a bar exam and score a 5 on several AP exams. There is a version with a 32k token context. We are previewing visual input for GPT-4; we will need some time to mitigate the safety challenges. we now support a ‘system’ message in the API that allows developers (and soon ChatGPT users) to have significant customization of behaviour. if you want an AI that always answers you in the style of Shakespeare, or in json, now you can have that.”

Like ChatGPT, GPT-4 is a generative artificial intelligence model: it works like a very advanced form of predictive text, building a response to a prompt by repeatedly predicting a likely next piece of text.
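To make the “predictive text” idea concrete, here is a toy Python sketch. It is only an illustration under loose assumptions: real GPT models use a large transformer neural network over tokens, not a table of word counts, and the tiny corpus and the helper names `train_bigrams` and `generate` are hypothetical. The sketch counts which word tends to follow which, then extends a prompt one word at a time by picking the most frequent continuation.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in a small training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no known continuation for the last word, so stop early
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = "the model predicts the next word and the next word follows the prompt"
model = train_bigrams(corpus)
print(generate(model, "the model", max_words=3))  # → the model predicts the next
```

A large language model does the same basic loop (predict, append, repeat), but its predictions come from billions of learned parameters and take the whole conversation into account rather than just the previous word.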

Altman also added in a series of tweets, “We are open-sourcing OpenAI Evals, our framework for automated evaluation of AI model performance, to allow anyone to help improve our models. we have had the initial training of GPT-4 done for quite a while, but it’s taken us a long time and a lot of work to feel ready to release it. we hope you enjoy it and we really appreciate feedback on its shortcomings.”

OpenAI has also announced new partnerships with the language-learning app Duolingo and the visual-assistance app Be My Eyes. Through these partnerships, the company hopes to create AI chatbots that can assist users in natural language.


What is GPT-4?

According to OpenAI’s announcement, GPT-4 is the newest version of OpenAI’s language model and the successor to GPT-3.5, the model behind ChatGPT. It is a more advanced model, capable of performing more complex language tasks than GPT-3 and ChatGPT, which already understand and generate human-like language.

GPT-4 will initially be available to ChatGPT Plus subscribers, who pay $20 per month for premium access to the service.

The latest version of the bot has “more advanced reasoning skills” as compared to ChatGPT, OpenAI claims. The language model, for example, can find available meeting times for three schedules.
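The meeting-scheduling demo is a small reasoning puzzle. For comparison, the conventional, non-AI way to solve it is to compute each person’s free time and intersect the results with a two-pointer sweep. The Python sketch below is our own illustration, not how GPT-4 solves the task; the schedules, the names `alice`, `bob` and `carol`, and the whole-hour intervals are hypothetical.

```python
from typing import List, Tuple

# Hypothetical representation: (start_hour, end_hour), end exclusive.
Interval = Tuple[int, int]


def free_slots(busy: List[Interval], day: Interval) -> List[Interval]:
    """Return the gaps in one person's working day not covered by busy intervals.

    Assumes every busy interval lies within the working day.
    """
    slots: List[Interval] = []
    cursor = day[0]  # earliest time not yet known to be busy
    for start, end in sorted(busy):
        if start > cursor:
            slots.append((cursor, start))
        cursor = max(cursor, end)  # also handles overlapping meetings
    if cursor < day[1]:
        slots.append((cursor, day[1]))
    return slots


def intersect(a: List[Interval], b: List[Interval]) -> List[Interval]:
    """Intersect two sorted lists of non-overlapping intervals (two-pointer sweep)."""
    i = j = 0
    out: List[Interval] = []
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:
            out.append((start, end))
        # Advance whichever interval finishes first.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return out


def common_free_time(schedules: List[List[Interval]], day: Interval) -> List[Interval]:
    """Find the slots in which everyone is free."""
    common = [day]
    for busy in schedules:
        common = intersect(common, free_slots(busy, day))
    return common


alice = [(9, 10), (12, 13)]
bob = [(10, 11), (15, 16)]
carol = [(9, 11), (14, 15)]
print(common_free_time([alice, bob, carol], (9, 17)))  # → [(11, 12), (13, 14), (16, 17)]
```

The point of OpenAI’s demo is that GPT-4 can reach this kind of answer from a plain-language description of the schedules, without anyone writing such a program.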

Nonetheless, OpenAI has also warned of a few shortcomings. Like its predecessors, GPT-4 is still not fully reliable, the company says.

The company has warned users that the program may “hallucinate” at times. In artificial intelligence, “hallucination” refers to a model confidently inventing facts or making reasoning errors.

What is a ChatGPT Plus Subscription?

ChatGPT Plus is a paid subscription tier of ChatGPT (the chat.openai.com service), separate from OpenAI’s developer API. Its benefits include faster response times, priority access to new features, and access to more advanced models such as GPT-4.

To access ChatGPT Plus, users need an OpenAI account and a subscription to the Plus plan, which costs $20 per month; no API key is required.

The Plus plan is intended for users with more demanding use cases and higher volumes of requests.

Altman also tweeted this week that OpenAI loves India and shared the details about the ChatGPT Plus subscription.

Altman tweeted “we love India” while quote-tweeting OpenAI’s official account, which had posted: “Great news! ChatGPT Plus subscriptions are now available in India. Get early access to new features, including GPT-4 today.”

It’s worth noting that the availability of ChatGPT Plus and its specific features may be subject to change, so interested users should check the OpenAI website for the most up-to-date information.

What is ChatGPT?

ChatGPT is a powerful natural language processing tool developed by OpenAI, a leading artificial intelligence research organization.

This tool is designed to understand and generate human-like language, making it an invaluable resource for a wide range of applications, from chatbots and customer service to content creation and language translation.

What sets ChatGPT apart is that it learns from vast amounts of text data through unsupervised pre-training, so it can understand and generate language without being explicitly trained on each specific language task. It is then fine-tuned with human feedback so that it follows instructions in conversation.

This allows ChatGPT to generate coherent language responses that are remarkably similar to what a human might say.

With ChatGPT, businesses and developers can easily integrate advanced language processing capabilities into their products and services, enabling them to better understand and communicate with their customers.

Whether you’re looking to build a chatbot, improve customer service, or create compelling content, ChatGPT can help you achieve your goals with its advanced natural language processing capabilities.

What is the Difference Between ChatGPT and GPT-4?

One likely difference between GPT-4 and ChatGPT is model size. OpenAI has not disclosed GPT-4’s size, but it is widely expected to be significantly larger, which would help it process more data and perform more complex tasks.

It will also have more advanced natural language processing capabilities, such as a better understanding of context and semantics.

Additionally, GPT-4 will have improved training methods and architecture, which will enable it to learn from even larger amounts of data and generate more accurate and coherent language responses.

Overall, GPT-4 represents a significant step forward in the development of AI language models and is expected to have a significant impact on a wide range of industries and applications.

Here’s a table summarizing some of the key differences between ChatGPT and GPT-4:

| Features | ChatGPT | GPT-4 |
| --- | --- | --- |
| Model Size | Based on GPT-3.5 (size not disclosed); can handle 4,096 tokens, or roughly 3,000 words | Expected to be significantly larger than GPT-3 (OpenAI has not disclosed its size); can handle up to 32,768 tokens, or roughly 25,000 words |
| Natural Language Processing | Can understand and generate human-like language | Expected to have more advanced NLP capabilities, such as better understanding of context and semantics |
| Training Methods | Trained on large amounts of text data using unsupervised learning | Expected to have improved training methods and architecture, enabling it to learn from even larger amounts of data |
| Accuracy and Coherency | Can generate coherent language responses, but may sometimes produce irrelevant or nonsensical responses | Expected to have improved accuracy and coherency in generating language responses |
| Potential Applications | Chatbots, customer service, language translation, content creation | Wide range of applications, including language translation, content creation, natural language understanding, and more |

Keep in mind that GPT-4 is still going through changes, so some of these details may change or be refined as more information becomes available.

What Is Visual ChatGPT?

Visual ChatGPT is a cutting-edge technology that combines natural language processing with computer vision to create a more immersive and interactive chatbot experience.

With Visual ChatGPT, users can engage in conversations with a virtual assistant that can understand both text and images, making it easier to communicate and receive information.

The technology behind Visual ChatGPT is based on OpenAI’s advanced language processing capabilities, which enable the chatbot to understand and generate human-like language responses.

In addition, Visual ChatGPT uses computer vision algorithms to analyze and interpret images, allowing the chatbot to understand visual inputs as well.

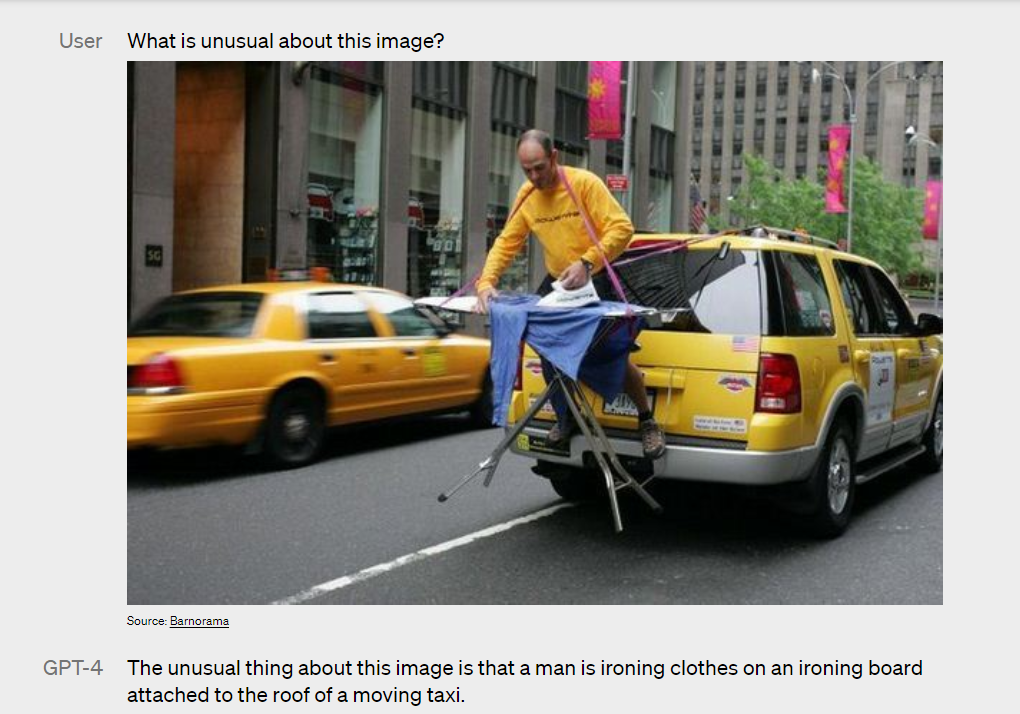

Here is an example of visual input in ChatGPT.

Visual ChatGPT has a wide range of potential applications, from customer service and e-commerce to education and entertainment.

For example, a visual chatbot could help users find products by analyzing an image of the product they’re looking for or provide educational resources by answering questions based on visual inputs.

Overall, Visual ChatGPT is an exciting development in the field of natural language processing and computer vision and has the potential to revolutionize the way we interact with virtual assistants.

How To Use Visual ChatGPT?

To use Visual ChatGPT, simply navigate to a website or app that has integrated the technology and start a conversation with the virtual assistant.

Once you’ve initiated the chat, you can enter text-based questions or provide images as inputs to get a response from the chatbot.

Here are quick pointers on how to use Visual ChatGPT:

- Navigate to a website or app that has integrated Visual ChatGPT technology.

- Initiate a chat with the virtual assistant by clicking on the chatbot icon or typing in a text-based message.

- Provide text-based questions or upload images as inputs to get a response from the chatbot.

- When providing visual inputs, select clear and relevant images that the chatbot can use to understand your query.

- Engage with the virtual assistant by answering follow-up questions or providing additional information as needed.

- Visual ChatGPT will use its advanced language processing and computer vision capabilities to understand your queries and provide relevant responses.

- Continue the conversation until you have received the information or assistance you need.

Overall, using Visual ChatGPT is a simple and straightforward process that can enhance your chatbot experience and enable you to communicate with virtual assistants in a more natural and interactive way.

FAQs on ChatGPT

Q1. What is the difference between GPT-3 and 4?

Answer. As OpenAI’s most advanced system, GPT-4 surpasses older versions of the models in almost every area of comparison.

It is more creative and more coherent than GPT-3. It can process longer pieces of text or even images.

It’s more accurate and less likely to make “facts” up.

Q2. Is GPT-4 free?

Answer. No. Only ChatGPT Plus subscribers get GPT-4 access on chat.openai.com, and even then with a usage cap.

OpenAI says it will adjust the exact usage cap depending on demand and system performance in practice, but they expect to be severely capacity constrained.

So, for now, apart from the developer API waitlist, the only way to access GPT-4’s text-input capability through OpenAI is a ChatGPT Plus subscription, which gives subscribers access to the language model for $20 a month.

Q3. Can GPT-4 generate images?

Answer. No. OpenAI claims that GPT-4 contains big improvements, and it can accept images as input, but it produces only text.

It has already stunned people with its ability to create human-like text and computer code from almost any prompt, but it cannot generate images itself.

Q4. Where can I use GPT-4?

Answer. To use GPT-4 in ChatGPT, you need the paid subscription, ChatGPT Plus. Open the ChatGPT site at chat.openai.com.

If you already have ChatGPT Plus, you can select GPT-4 from the model menu.

Q5. Will GPT-4 have any aspect of Steerability?

Answer. OpenAI, in its GPT-4 announcement blog, stated, “We’ve been working on each aspect of the plan outlined in our post about defining the behaviour of AIs, including steerability. Rather than the classic ChatGPT personality with a fixed verbosity, tone, and style, developers (and soon ChatGPT users) can now prescribe their AI’s style and task by describing those directions in the “system” message. System messages allow API users to significantly customize their users’ experience within bounds. We will keep making improvements here (and particularly know that system messages are the easiest way to “jailbreak” the current model, i.e., the adherence to the bounds is not perfect), but we encourage you to try it out and let us know what you think.”
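For developers, the “system” message is simply the first entry in the list of chat messages sent to the API. The Python sketch below builds, but does not send, such a request using only the standard library. It assumes OpenAI’s Chat Completions endpoint and the `gpt-4` model name as published at launch; the helper `build_chat_request`, the Socratic-tutor prompt and the `YOUR_API_KEY` placeholder are illustrative, and actually sending the request requires an API key with GPT-4 access (granted via the waitlist at launch).

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(system_prompt: str, user_prompt: str, api_key: str,
                       model: str = "gpt-4") -> urllib.request.Request:
    """Build (but do not send) a chat request whose system message sets the AI's style."""
    payload = {
        "model": model,
        "messages": [
            # The system message prescribes the assistant's style and task for the chat.
            {"role": "system", "content": system_prompt},
            # The user message is the actual question.
            {"role": "user", "content": user_prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request(
    "You are a tutor who always responds in the Socratic style.",
    "How do I solve 3x + 2 = 8?",
    api_key="YOUR_API_KEY",  # placeholder: a real key is needed to send the request
)
print(json.loads(req.data)["messages"][0]["role"])  # → system
```

With a valid key, `urllib.request.urlopen(req)` would send the request. Swapping the system prompt (for example, “always answer in the style of Shakespeare” or “always answer in JSON”) is how the customization described above is meant to work, within the bounds OpenAI notes are not yet perfectly enforced.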

Q6. What are some limitations of GPT-4?

Answer. Despite its capabilities, GPT-4 has similar limitations as earlier GPT models. Most importantly, it still is not fully reliable (it “hallucinates” facts and makes reasoning errors).

Great care should be taken when using language model outputs, particularly in high-stakes contexts, with the exact protocol (such as human review, grounding with additional context, or avoiding high-stakes uses altogether) matching the needs of a specific use case.

Q7. What is the difference between GPT-4 and GPT-3.5?

Answer. GPT-3.5 takes only text prompts, whereas the latest version of the large language model can also use images as inputs to recognize objects in a picture and analyze them.

GPT-3.5 can handle only about 3,000 words of text at a time (prompt and response combined), while the 32k version of GPT-4 can handle more than 25,000 words.

GPT-4 is 82% less likely to respond to requests for disallowed content than its predecessor and scores 40% higher on certain tests of factuality.

It also lets developers decide their AI’s tone, style and verbosity.

For example, GPT-4 can assume a Socratic style of conversation and respond to questions with questions.

The previous iteration of the technology had a fixed tone and style. Soon ChatGPT users will have the option to change the chatbot’s tone and style of responses, OpenAI said.

Q8. What are the capabilities of GPT-4?

Answer. The latest version has outperformed its predecessor in the U.S. bar exam and the Graduate Record Examination (GRE).

GPT-4 can also help individuals calculate their taxes, a demonstration by Greg Brockman, OpenAI’s president, showed.

The demo showed it could take a photo of a hand-drawn mock-up for a simple website and create a real one.

Be My Eyes, an app that caters to visually impaired people, will provide a virtual volunteer tool powered by GPT-4 on its app.

Q9. What are the limitations of GPT-4?

Answer. According to OpenAI, GPT-4 has similar limitations as its prior versions and is “less capable than humans in many real-world scenarios.”

Inaccurate responses known as “hallucinations” have been a challenge for many AI programs, including GPT-4.

OpenAI said GPT-4 can rival human propagandists in many domains, especially when teamed up with a human editor.

It cited an example where GPT-4 came up with suggestions that seemed plausible, when it was asked about how to get two parties to disagree with each other.

OpenAI Chief Executive Officer Sam Altman said GPT-4 was “most capable and aligned” with human values and intent, though “it is still flawed.”

GPT-4 generally lacks knowledge of events that occurred after September 2021, the cutoff for the vast majority of its training data. It also does not learn from experience.

Q10. Who has access to GPT-4?

Answer. While GPT-4 can process both text and image inputs, only the text-input feature is available, to ChatGPT Plus subscribers and (via a waitlist) to software developers; the image-input ability is not publicly available yet.

The subscription plan, which offers faster response time and priority access to new features and improvements, was launched in February and costs $20 per month.

GPT-4 powers Microsoft’s Bing AI chatbot and some features on language learning platform Duolingo’s subscription tier.

Q11. What’s New in GPT-4?

Answer. GPT-4 is a new language model created by OpenAI that can generate text that is similar to human speech.

It advances the technology used by ChatGPT, which is currently based on GPT-3.5.

GPT is the acronym for Generative Pre-Trained Transformer, a deep learning technology that uses artificial neural networks to write like a human.

According to OpenAI, this next-generation language model is more advanced in three key areas: creativity, visual input, and longer context.

In terms of creativity, OpenAI says GPT-4 is much better at both creating and collaborating with users on creative projects.

Examples of these include music, screenplays, technical writing, and even “learning a user’s writing style.”

The longer context plays into this as well. GPT-4 can now process up to 25,000 words of text from the user.

You can even just send GPT-4 a web link and ask it to interact with the text from that page.

OpenAI says this can be helpful for the creation of long-form content, as well as “extended conversations.”

GPT-4 can also now receive images as a basis for interaction.

In the example provided on the GPT-4 website, the chatbot is given an image of a few baking ingredients and is asked what can be made with them.

It is not currently known if video can also be used in this same way.

OpenAI says it’s been trained with human feedback to make these strides, claiming to have worked with “over 50 experts for early feedback in domains including AI safety and security.”

As the first users have flocked to get their hands on it, we’re starting to learn what it’s capable of.

One user made GPT-4 create a working version of Pong in just sixty seconds, using a mix of HTML and JavaScript.

Q12. How to Use GPT-4?

Answer. The easiest way to get started with GPT-4 today is to try it out as part of Bing Chat.

Microsoft revealed that it’s been using GPT-4 in Bing Chat, which is free to use. Some GPT-4 features are missing from Bing Chat, however, such as visual input.

But you’ll still have access to that expanded LLM & the advanced intelligence that comes with it.

It should be noted that while Bing Chat is free, it is limited to 15 chats per session and 150 sessions per day.

The only other way to access GPT-4 right now is to upgrade to ChatGPT Plus.

To jump up to the $20 paid subscription, just click on “Upgrade to Plus” in the sidebar in ChatGPT.

Once you’ve entered your credit card information, you’ll be able to toggle between GPT-4 and older versions of the LLM.

You can even double-check that you’re getting GPT-4 responses since they use a black logo instead of the green logo used for older models.

From there, using GPT-4 is identical to using ChatGPT Plus with GPT-3.5, except that the model is more capable than the default ChatGPT model and better at tailoring its results to your needs.

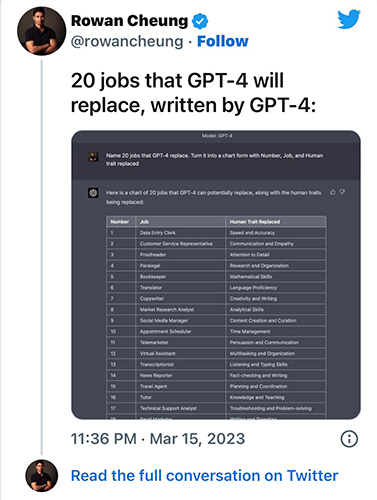

Q13. Which jobs could be taken up by AI?

Answer. Prashanth Rangaswamy, a Twitter user, prompted GPT-4 to identify 20 current-day jobs that it could replace.

He then asked the AI to present the response in the form ‘number, position, and human characteristic replaced,’ and the result is startling.

The 20 jobs it says it can replace, ranked by how easily ChatGPT could replace them, are:

* Data Entry Clerk

* Customer Service Representative

* Proofreader

* Paralegal

* Bookkeeper

* Translator

* Copywriter

* Market Research Analyst

* Social Media Manager

* Appointment Scheduler

* Telemarketer

* Virtual Assistant

* Transcriptionist

* News Reporter

* Travel Agent

* Tutor

* Technical Support Analyst

* Email Marketer

* Content Moderator

* Recruiter

Not only that, but GPT-4-enabled ChatGPT also listed the human characteristics it could replace in these positions.

These traits include speed and accuracy, communication and empathy, attention to detail, research and organization, mathematical skills, language proficiency, creativity and writing, analytical skills, content creation and curation, time management, persuasion and communication, multitasking and organization, listening and typing skills, fact-checking and writing, planning and coordination, knowledge and teaching, troubleshooting and problem-solving, writing and targeting, critical thinking and judgement, interviewing and assessment.

When OpenAI launched GPT-4 and demonstrated what it was capable of, Twitter CEO Elon Musk tweeted: “What will be left for us people to do? We’d better get Neuralink going.”

Q14. How did AI start taking human jobs weeks before the GPT-4 launch?

Answer. According to a recent poll of 1,000 business leaders conducted by Resumebuilder.com, roughly half of US businesses have adopted ChatGPT in some way, and many of those have started replacing workers with the AI chatbot, either letting them go or moving them to other roles within the company.

This was before OpenAI released GPT-4, so the number of businesses going for AI-based resources is only going to increase.

When OpenAI released the enhanced edition of ChatGPT, powered by GPT-4, it was asked whether it had considered how many jobs it would affect.

However, OpenAI has stated that the AI chatbot cannot replace humans and is designed to assist people in their jobs.

Q15. Can you try GPT-4 right now?

Answer. GPT-4 has already been integrated into products like Duolingo, Stripe, and Khan Academy for varying purposes. While it’s yet to be made available for all for free, a $20 per month ChatGPT Plus subscription can fetch you immediate access.

The free tier of ChatGPT, meanwhile, continues to be based on GPT-3.5.

However, if you don’t wish to pay, then there’s an ‘unofficial’ way to begin using GPT-4 immediately.

Microsoft has confirmed that the new Bing search experience now runs on GPT-4 and you can access it from bing.com/chat right now.

Meanwhile, developers will gain access to GPT-4 through its API. A waitlist has been announced for API access, and it will begin accepting users later this month.

Q16. How is GPT-4 different from GPT-3?

Answer. GPT-4 can ‘see’ images now: The most noticeable change to GPT-4 is that it’s multimodal, allowing it to understand more than one modality of information. GPT-3 and ChatGPT’s GPT-3.5 were limited to textual input and output, meaning they could only read and write.

However, GPT-4 can be fed images and asked to output information accordingly. If this reminds you of Google Lens, then that’s understandable.

But Lens only searches for information related to an image. GPT-4 is a lot more advanced in that it understands an image and analyses it.

An example provided by OpenAI showed the language model explaining the joke in an image of an absurdly large iPhone connector. The only catch is that image inputs are still a research preview and are not publicly available.

GPT-4 is harder to trick: One of the biggest drawbacks of generative models like ChatGPT and Bing is their propensity to occasionally go off the rails, generating responses that raise eyebrows or, worse, downright alarm people. They can also get facts mixed up and produce misinformation.

OpenAI says that it spent 6 months iteratively aligning GPT-4 using lessons from its “adversarial testing program” as well as ChatGPT, resulting in the company’s “best-ever results on factuality, steerability, and refusing to go outside of guardrails.”

GPT-4 can process a lot more information at a time: Large Language Models (LLMs) may have billions of parameters and be trained on enormous amounts of data, but there are limits to how much information they can process in a single conversation. ChatGPT’s GPT-3.5 model could handle 4,096 tokens, or roughly 3,000 words, but GPT-4 pumps those numbers up to 32,768 tokens, or roughly 25,000 words, in its 32k version.

This increase means that where ChatGPT could process only about 3,000 words at a time before it started to lose track of things, GPT-4 can stay coherent over much longer conversations. It can also process lengthy documents and generate long-form content, something that was far more limited on GPT-3.5.
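Token-to-word figures like these rest on a rule of thumb: OpenAI’s guidance for common English text is that one token is roughly three-quarters of a word. The Python sketch below simply applies that assumed ratio; real counts depend on the text and the tokenizer, so treat the results as estimates. The 8,192-token context of the standard GPT-4 model is included for comparison.

```python
WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English text, not an exact ratio


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit into a given number of tokens."""
    return round(tokens * words_per_token)


contexts = [
    ("GPT-3.5 (ChatGPT)", 4_096),
    ("GPT-4 (standard)", 8_192),
    ("GPT-4 (32k version)", 32_768),
]
for name, tokens in contexts:
    print(f"{name}: {tokens:,} tokens ≈ {approx_words(tokens):,} words")
```

The results (about 3,072 and 24,576 words for the smallest and largest contexts) line up with the “about 3,000 words” and “more than 25,000 words” figures commonly quoted for GPT-3.5 and GPT-4.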

GPT-4 has improved accuracy: OpenAI admits that GPT-4 has similar limitations as previous versions: it’s still not fully reliable and makes reasoning errors. However, “GPT-4 significantly reduces hallucinations relative to previous models” and scores 40 per cent higher than GPT-3.5 on factuality evaluations. It will be a lot harder to trick GPT-4 into producing undesirable outputs such as hate speech and misinformation.

GPT-4 is better at understanding languages that are not English: Machine learning data is mostly in English, as is most of the information on the internet today, so training LLMs in other languages can be challenging.

But GPT-4 is more multilingual, and OpenAI has demonstrated that it outperforms GPT-3.5 and other LLMs by accurately answering thousands of multiple-choice questions across 26 languages. It handles English best, with 85.5 per cent accuracy, but Indian languages like Telugu aren’t too far behind, at 71.4 per cent.

What this means is that users will be able to use chatbots based on GPT-4 to produce outputs with greater clarity and higher accuracy in their native languages.
