Highlights

- NVIDIA unveils HGX H200 GPU, a major upgrade from the H100, featuring enhanced AI training capabilities.

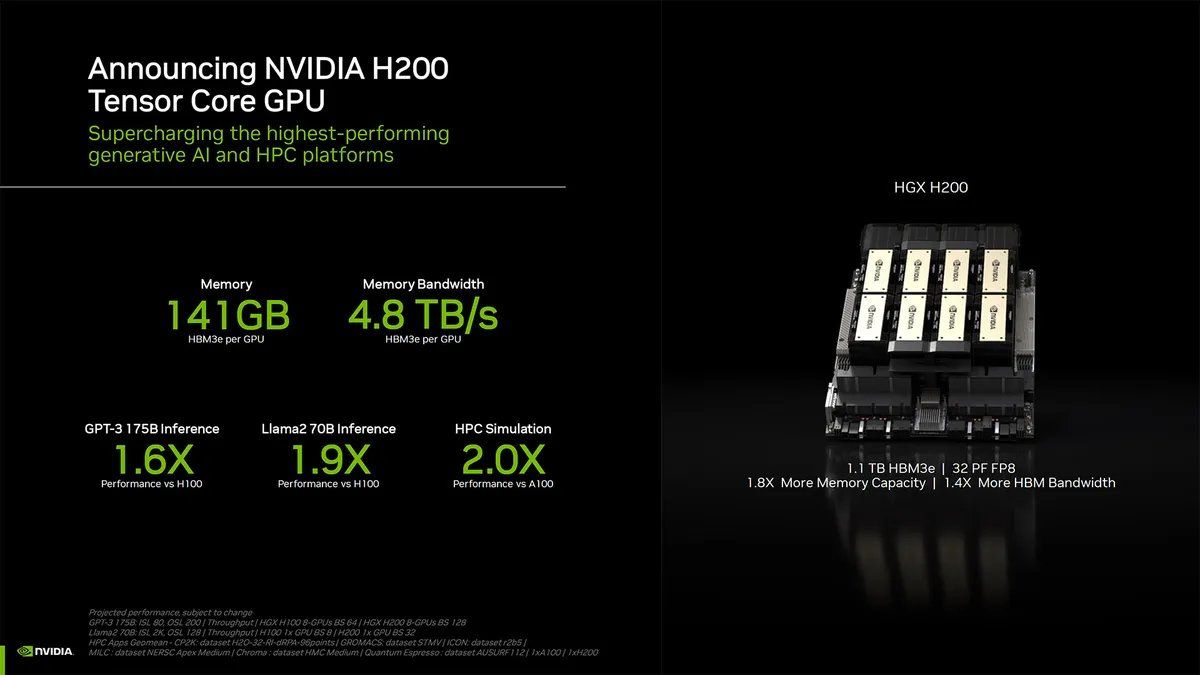

- The H200 leverages Hopper architecture and introduces the H200 Tensor Core GPU with HBM3e memory.

- Cloud giants like Google Cloud, AWS, and Microsoft Azure to deploy H200-based instances in 2024.

NVIDIA has recently announced the launch of the HGX H200 GPU, an advancement over the previous HGX H100.

This new chip, designed primarily for training AI systems, integrates several performance enhancements.

The H200 remains compatible with the hardware and software of H100 systems, which should make upgrades straightforward.

Deployment and Enhanced Capabilities

The HGX H200 platform is built on NVIDIA’s Hopper architecture and features the H200 Tensor Core GPU.

Its standout feature is HBM3e memory, which provides 141GB of capacity at 4.8 terabytes per second of bandwidth.

This capacity, almost double that of the earlier-generation A100, is aimed at accelerating generative AI and large language models (LLMs).

The H200 also nearly doubles inference speed on complex models such as Llama 2 compared with the H100.

Various configurations will be offered, including four- and eight-way GPU setups and integration into the NVIDIA GH200 Grace Hopper Superchip with HBM3e.

Major cloud providers like Google Cloud, Amazon Web Services, Microsoft Azure, and Oracle Cloud Infrastructure, along with companies such as CoreWeave, Lambda, and Vultr, are set to deploy H200-based instances in 2024.

Technical Specifications and Future Availability

In its eight-way configuration, the HGX H200 delivers over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory.
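The eight-way figures follow from the per-GPU numbers quoted earlier, and a quick check makes the arithmetic explicit. The sketch below is illustrative only: the function names are hypothetical, it uses decimal units (1 TB = 1000 GB), and it treats "over 32 petaflops" as a lower bound that is split evenly across the eight GPUs.

```python
# Figures quoted in the article.
GPUS_PER_BOARD = 8
MEMORY_PER_GPU_GB = 141
BOARD_FP8_PETAFLOPS = 32  # "over 32 petaflops", so this is a lower bound


def aggregate_memory_tb(gpus: int, memory_per_gpu_gb: float) -> float:
    """Total HBM3e across the board in decimal terabytes (1 TB = 1000 GB)."""
    return gpus * memory_per_gpu_gb / 1000


def per_gpu_petaflops(board_petaflops: float, gpus: int) -> float:
    """Even split of the board-level FP8 figure across its GPUs (an assumption)."""
    return board_petaflops / gpus


total_tb = aggregate_memory_tb(GPUS_PER_BOARD, MEMORY_PER_GPU_GB)
fp8_each = per_gpu_petaflops(BOARD_FP8_PETAFLOPS, GPUS_PER_BOARD)

print(f"Aggregate memory: {total_tb:.3f} TB")
print(f"FP8 compute per GPU: at least {fp8_each:.1f} petaflops")
```

Eight GPUs at 141GB each come to 1,128GB, which rounds to the quoted 1.1TB, and the board-level compute figure works out to at least 4 petaflops of FP8 per GPU.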

Its availability is anticipated to begin in the second quarter of 2024.

This release, more than a year after the debut of the H100, which was NVIDIA’s inaugural GPU based on the Hopper architecture, shows the company’s continuous evolution in AI and supercomputing technologies.

NVIDIA’s H200 is expected to significantly impact various application workloads, especially in training and inference for large language models exceeding 175 billion parameters.
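To give a rough sense of why memory capacity matters at this scale, the sketch below estimates how much memory the weights of a 175-billion-parameter model occupy and how many 141GB GPUs would be needed just to hold them. The bytes-per-parameter values (2 for FP16, 1 for FP8) are standard for those formats, but the helper names are hypothetical and the estimate deliberately ignores activations, KV cache, optimizer state, and framework overhead, so real deployments need more headroom.

```python
import math

H200_MEMORY_GB = 141      # per-GPU capacity quoted in the article
NUM_PARAMS = 175e9        # model size mentioned in the article

# Storage per parameter for two common precisions (weights only).
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weight_footprint_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_gpus_for_weights(num_params: float, bytes_per_param: int,
                         gpu_memory_gb: float = H200_MEMORY_GB) -> int:
    """Smallest GPU count whose combined memory fits the weights (no overhead)."""
    return math.ceil(weight_footprint_gb(num_params, bytes_per_param) / gpu_memory_gb)


for precision, nbytes in BYTES_PER_PARAM.items():
    size_gb = weight_footprint_gb(NUM_PARAMS, nbytes)
    gpus = min_gpus_for_weights(NUM_PARAMS, nbytes)
    print(f"{precision}: {size_gb:.0f} GB of weights, at least {gpus} GPUs")
```

Under these assumptions, the weights take 350GB in FP16 (at least three GPUs) or 175GB in FP8 (at least two), both well within the 1.1TB of an eight-way board.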

FAQs

What makes the NVIDIA HGX H200 different from its predecessor, the H100?

Like the H100, the NVIDIA HGX H200 is built on the Hopper architecture. What sets it apart is the H200 Tensor Core GPU, which introduces HBM3e memory: 141GB at 4.8 terabytes per second, almost double the capacity of the earlier A100. NVIDIA also cites nearly double the inference speed of the H100 on models such as Llama 2, which makes the H200 better suited to generative AI and large language models.

When will the NVIDIA HGX H200 be available, and who will be deploying it?

The HGX H200 is expected to be available starting in the second quarter of 2024. Major cloud service providers like Google Cloud, Amazon Web Services, Microsoft Azure, and Oracle Cloud Infrastructure, along with companies such as CoreWeave, Lambda, and Vultr, are set to deploy H200-based instances.

What are the technical capabilities of the NVIDIA HGX H200?

The NVIDIA HGX H200 offers a significant leap in performance, delivering over 32 petaflops of FP8 deep learning compute and 1.1TB of high-bandwidth memory. Its eight-way configuration is particularly powerful for AI training and large language model processing.