Highlights

- The deepfake threat is moving to the centre of cyber threats, with growing concern about its misuse in many spheres of personal and public life.

- The NSA, FBI, and CISA recently released a “Cybersecurity Information Sheet (CSI), Contextualizing Deepfake Threats to Organizations.”

- The document provides an overview of synthetic media threats, techniques, and trends.

The risks surrounding the use of AI are becoming more visible to ordinary people in many ways.

There is now growing concern that AI, and deepfakes in particular, can threaten important institutions, large companies, and businesses in the real world.

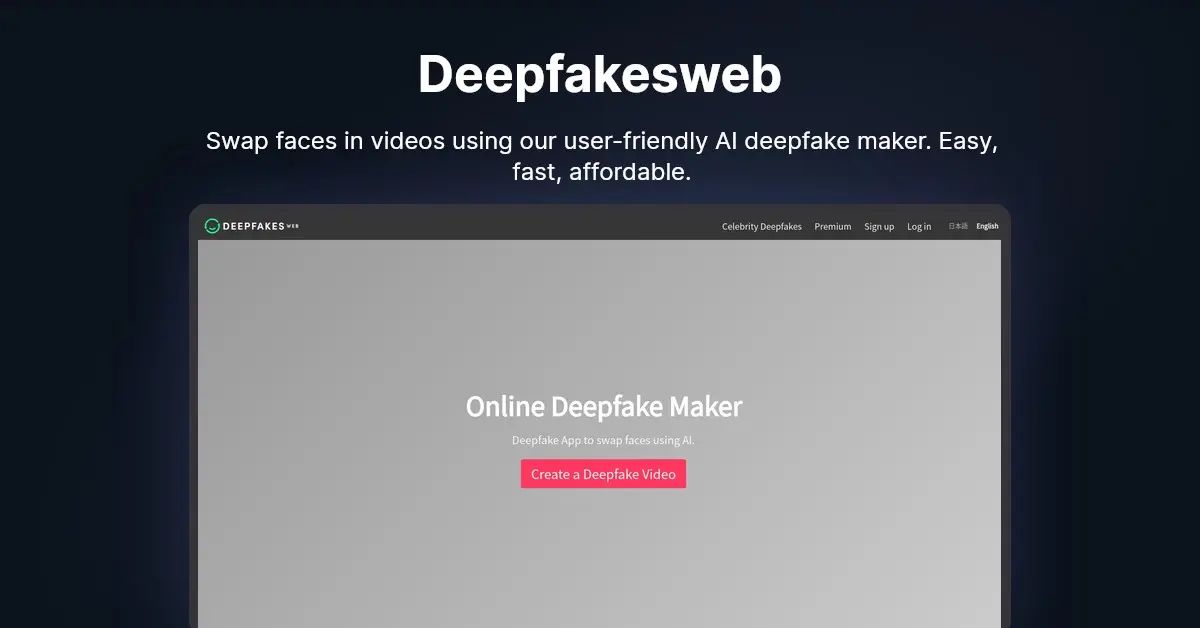

Recently, the National Security Agency (NSA), the Federal Bureau of Investigation (FBI), and the Cybersecurity and Infrastructure Security Agency (CISA) released a “Cybersecurity Information Sheet (CSI), Contextualizing Deepfake Threats to Organizations,” which provides an overview of synthetic media threats, techniques, and trends.

Before we get to the details of the “Cybersecurity Information Sheet (CSI), Contextualizing Deepfake Threats,” let’s look at what deepfakes are and how serious the threat is.

What are Deepfakes?

According to the Cybersecurity Information Sheet (CSI), Contextualizing Deepfake Threats, “Deepfakes are AI-generated, highly realistic synthetic media that can be abused to:

- Threaten an organization’s brand

- Impersonate leaders and financial officers

- Enable access to networks, communications, and sensitive information”

The document also outlines deepfake social engineering, which includes:

- Fraudulent texts

- Fraudulent voice messages

- Faked videos

How Serious Are Deepfake Threats?

A Forbes report reveals that in early 2020, a Hong Kong-based bank manager received a deepfake call so convincing that his firm ended up losing $35m (Rs 288.7 crore).

In other words, deepfake-related incidents predate the launch of ChatGPT and the boom in generative artificial intelligence.

Today, highly sophisticated AI tools are publicly available, and anyone, including people with bad intentions, can use them as they wish.

“There are now fake clone apps that scammers can use to create videos of P&L statements, ledgers, and other reports of trading platforms & bank accounts. Fake videos because they would seem more genuine than fake screenshots,” said Zerodha’s Nithin Kamath on X, formerly Twitter.

How to Resist Deepfakes Following FBI and NSA Advice

Here are some basic steps to prepare to identify, defend against, and respond to deepfake threats.

How to select and implement technologies to detect deepfakes

- First and foremost, make a copy of the media prior to any analysis.

- Hash both the original and the copy to verify that the copy is exact.

- Always check the source of the media (i.e., whether the organization or person is reputable) before drawing conclusions.

- Use reverse image searches, such as TinEye, Google Image Search, and Bing Visual Search; they can be extremely useful if the media is a composite of images.

- Keep an eye out for physically impossible properties, such as feet not touching the ground.

- Keep an eye out for the presence of audio filters, such as noise added for obfuscation.

- Check vanishing points, reflections, shadows, and other physical details for consistency.

- Use tools designed to look for compression artefacts, bearing in mind that lossy compression inherently destroys many forensic artefacts.

- Consider plug-ins to detect suspected fake profile pictures.
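The first two steps above, copying the media and confirming that the copy is exact, can be sketched in Python. This is a minimal sketch, assuming SHA-256 as the hash function (the guidance as summarised here does not name a specific algorithm); the helper names and the `suspect_clip.mp4` file name are hypothetical.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in 1 MiB chunks by default."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def make_verified_copy(original: Path, copy_dir: Path) -> Path:
    """Copy `original` into `copy_dir` and check the copy is bit-for-bit identical.

    Hypothetical helper: analysis should run on the returned copy so the
    original stays untouched.
    """
    copy_dir.mkdir(parents=True, exist_ok=True)
    working_copy = copy_dir / original.name
    shutil.copy2(original, working_copy)  # copy2 also preserves timestamps
    original_hash, copy_hash = sha256_of(original), sha256_of(working_copy)
    if original_hash != copy_hash:
        raise ValueError(f"copy differs from original: {copy_hash} != {original_hash}")
    return working_copy


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = Path(tmp) / "suspect_clip.mp4"  # hypothetical file name
        sample.write_bytes(b"example media bytes")
        copy = make_verified_copy(sample, Path(tmp) / "analysis")
        print(copy, sha256_of(copy) == sha256_of(sample))
```

Keeping both hashes with the case notes also lets a later reviewer confirm that the file that was analysed is the one that was collected.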

What Can Policy Makers or Organisations Do?

Last week, Union IT Minister Shri Ashwini Vaishnaw expressed concern about deepfakes while speaking to the media at an event.

He emphasised that an “extensive set of technologies” is already available to “automatically detect” deepfake content.

According to the minister, India will soon introduce new legislation to curb the menace.

Speaking to the media, Prof. Triveni Singh, Superintendent of Police (SP) for cybercrime in the Indian state of Uttar Pradesh, said, “Deepfake is a brute reality. No employee should believe any high-priority information or instruction without two or three-factor verification. Above all, awareness is key.”
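Prof. Singh’s rule can be expressed as a simple check that an organisation might build into a payment or approval workflow. The sketch below is a hypothetical illustration, not a tool recommended by the police or the CSI: the factor names, the `Instruction` class, and the default of two factors are all assumptions. The key design choice is that the channel the instruction arrived on (a call, email, or video) never counts as a factor, because that is exactly the channel a deepfake would compromise.

```python
from dataclasses import dataclass, field

# Hypothetical, organisation-defined verification factors. Each one must use a
# channel independent of the one the instruction arrived on.
INDEPENDENT_FACTORS = {"callback_known_number", "in_person", "internal_ticket", "manager_approval"}


@dataclass
class Instruction:
    requester: str        # who the message claims to come from
    high_priority: bool   # e.g. payment transfers or credential resets
    verified_by: set[str] = field(default_factory=set)  # checks completed so far


def may_act_on(instr: Instruction, required_factors: int = 2) -> bool:
    """Return True only if a high-priority instruction has enough independent checks.

    Anything not in INDEPENDENT_FACTORS (such as "the caller looked right on video")
    is ignored, since a deepfake could fake exactly that.
    """
    if not instr.high_priority:
        return True
    completed = instr.verified_by & INDEPENDENT_FACTORS
    return len(completed) >= required_factors


if __name__ == "__main__":
    urgent = Instruction("CFO", high_priority=True, verified_by={"video_call", "callback_known_number"})
    print(may_act_on(urgent))  # False: "video_call" is not an independent factor
    urgent.verified_by.add("manager_approval")
    print(may_act_on(urgent))  # True: two independent factors completed
```

Raising `required_factors` to 3 corresponds to the stricter “three-factor” end of the advice.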

The paper co-authored by the NSA, FBI, and CISA recommends the following steps for protecting organisations against deepfake attacks:

- Real-time verification

Companies and government departments should adopt identity verification for real-time communications that incorporates liveness checks on human faces and behaviour.

- Passive detection

For pre-existing media, forensic analysis can be very helpful. This includes making and hashing copies, checking the source, running reverse image searches (using tools like TinEye or Google Image Search), examining visuals and audio for signs of manipulation, and examining metadata.

Advanced methods include physics-based examinations (checking vanishing points and reflections), compression artefact analysis, and content-based tools that target specific manipulations.

- Protect public data of high-priority officers

To safeguard people from disinformation, use authentication techniques such as watermarks and Content Authenticity Initiative (CAI) standards.

Organizational security teams should plan and rehearse deepfake response strategies, report incidents to government partners, and train personnel in deepfake recognition and response.

Training resources are available from the SANS Institute and the MIT Media Lab.

- Cross-industry partnerships

The Coalition for Content Provenance and Authenticity (C2PA) develops standards and offers tools for media content provenance.

It is currently working with more than 1,000 companies.

FAQs

Can deepfakes be used for identity theft?

Yes. Deepfake technology can be used to create new identities and steal the identities of real people.

Attackers use the technology to create false documents or fake their victim’s voice, which enables them to create accounts or purchase products by pretending to be that person.

Is watching deepfakes a crime?

Watching deepfakes is not universally illegal, but sharing or passing on content that breaks the rules, for example content that is inappropriate and made without the person’s permission, can be.

Is it safe to use deepfake apps?

In general, using deepfake apps is not illegal. Many people use them as photo editing tools or for harmless fun. However, it can be illegal if the deepfakes you create break the law.

How to report deepfake videos in India?

You can report deepfake videos directly on the platform or report them to your city cyber cell.

The Government of India also advises users to immediately file an FIR at their police station. Social media platforms usually take action against such reported videos within 24 hours of reporting.

How to stay safe from AI-based deepfake calls?

Deepfake calls can be tricky to spot, but these tips can help you stay safe:

* Be suspicious of any calls from people you do not know or calls you do not expect. If someone claims to be a friend or family member but you’re unsure of their identity, ask them a personal question that only they could answer. If they cannot answer it satisfactorily, the call is probably fraudulent.

* Do not give out personal information or money to people you do not know or trust. If someone calls and asks for personal information, such as your Social Security number or credit card number, or asks for money, do not share it.

* If you suspect a call might be a deepfake or otherwise fraudulent, end it immediately and avoid answering any subsequent calls from that number. Then contact the person independently, using a phone number you know belongs to them, to verify their identity. This way, you can confirm whether the call was authentic and protect yourself from scams or impersonation.

* Report any suspicious call, including any call you think may be a deepfake, to the police.

Additionally, be aware of the signs of a deepfake call. These signs can include:

* The caller’s voice may sound different from how you remember it.

* The caller may be asking for personal information that they should not know.

* The caller may be asking you to do something that you would not normally do, such as send money or give out your credit card number.

What should you do if you get calls from +92 numbers?

* Don’t answer calls from numbers with the +92 country code.

* Avoid messaging or calling that number back.

* Immediately block and report the number.

* Inform WhatsApp about the calls or messages you received from the suspicious number via email.

What is behind the rise in Bollywood deepfakes?

The world of Bollywood, known for its glamour and entertainment, has been shaken by the malicious use of deepfake technology. Prominent actresses, including Rashmika Mandanna, Katrina Kaif, and Kajol, have been unwitting victims of this insidious trend.

A viral video featuring Rashmika Mandanna sparked concerns, leading PM Modi to emphasize the pivotal role of media in educating the public about the dangers of deepfake technology.

The morphed video of Rashmika Mandanna showcased the advanced capabilities of deepfake technology. It was manipulated so convincingly that distinguishing it from genuine footage became difficult.

Rashmika, deeply affected by the incident, took to her Instagram story to express her distress, highlighting the severe implications of such technology on personal and societal trust.

Kajol also faced the repercussions of deepfake manipulation. In her case, a “get ready with me” (GRWM) TikTok video by the British-Indian influencer Rosie Breen was turned into a nightmare when Kajol’s face was seamlessly morphed into the footage.

In Katrina Kaif’s case, a still from her recently released film ‘Tiger 3’ was digitally altered. The original scene showed the actress, clad in a towel, fighting a stuntwoman; the edited version showed her wearing a low-cut white top and matching bottoms instead of the towel.
