Highlights

- Apple removes apps used to create non-consensual nude images.

- Removed apps were listed as “art generators” but actually produced non-consensual nude images.

- Apple’s action follows reports of similar AI misuse on Huawei’s Pura 70 Ultra smartphone.

- Apple responds after media exposure highlights app misuse.

Apple has removed three apps from its App Store that claimed to be able to create non-consensual nude images of people.

It seems that after a lapse in judgment lasting a few years, the world’s most valuable company is now more willing to tackle this harmful category of apps.

The Removed Apps

According to a report by 404 Media, Apple removed three applications that had previously been available on the App Store.

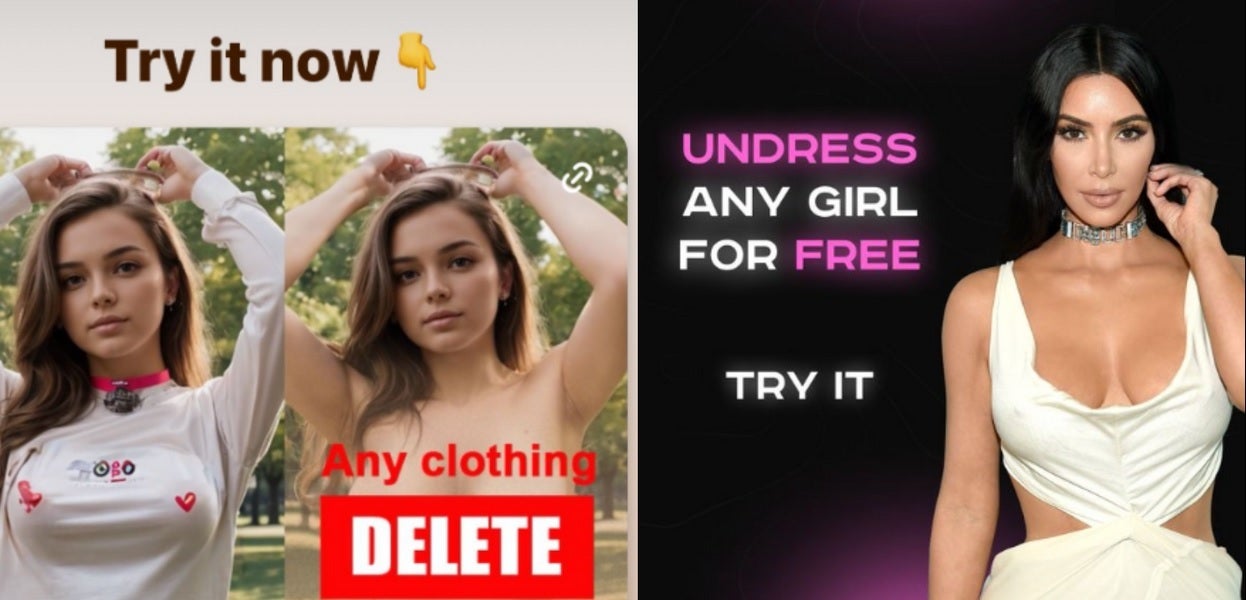

These apps were initially marketed on platforms like Instagram and adult websites, offering users the ability to “Undress any girl for free.”

Despite being labelled as “art generators” in the App Store, their actual function was to produce non-consensual nude images.

This move by Apple follows incidents where AI technology in Huawei’s new Pura 70 Ultra smartphone was reported to remove clothes from images, creating the illusion of nudity.

Huawei has acknowledged the issue and plans to modify the algorithm in an upcoming update.

The Ongoing Issue

This isn’t a new problem.

Similar apps have existed since 2022. While they initially seemed innocent to Apple and Google, their developers were advertising on adult sites that the apps could create porn.

Instead of removing the apps outright, the tech giants had previously allowed them to remain as long as they stopped advertising on porn sites.

The Crackdown

It was only after a media outlet provided Apple with links to the offending apps and their advertisements that the company took action and removed them.

This suggests Apple couldn’t identify these harmful apps on its own, a serious problem when it comes to policing potentially explicit content.

FAQs

What prompted Apple to remove these apps from the App Store?

Apple removed the apps after a media outlet reported that they were used to create non-consensual nude images despite being listed as “art generators.”

How were these harmful apps marketed to users?

These apps were advertised on social media and adult websites with claims that they could “undress any girl for free,” promoting their capability to generate nude images.

What were the implications of these apps on user privacy and consent?

The apps raised significant concerns about privacy and consent, as they allowed users to generate nude images of people without their permission, which is ethically and legally questionable.

Has Apple faced similar issues with apps before?

Yes, this is not the first instance. Similar apps have been on the App Store since 2022, but Apple initially allowed them to remain as long as they ceased advertising on adult platforms.

What steps is Apple taking to prevent similar apps from appearing on the App Store in the future?

While Apple has not detailed specific future actions, this incident highlights the need for stricter vetting and monitoring of app content and marketing practices to prevent misuse.
