Highlights

- ReALM transforms reference resolution into a language modeling task.

- Digitally reconstructs screen layout for enhanced interaction.

- Outperforms existing systems including GPT-4 in reference tasks.

- Promises more intuitive interactions with voice-activated systems.

Apple has taken a significant step forward in the realm of artificial intelligence with the development of ReALM (Reference Resolution as Language Modeling).

This advanced AI system is designed to transform the way voice assistants process and respond to user commands.

Apple’s ReALM: A Leap Forward in AI

At the core of ReALM’s innovation, as revealed by a research paper, is its approach to resolving references in human conversation.

This process, known as reference resolution, is crucial for understanding ambiguous expressions such as pronouns (“it,” “that one”) and other references that depend on context.

It’s a feature that enables humans to communicate seamlessly but has long posed a challenge for digital assistants due to its reliance on deciphering a broad spectrum of verbal and visual signals.

The breakthrough presented by Apple involves recasting reference resolution as a language modeling problem.

By doing so, ReALM can not only grasp references to items on a display screen but also weave this comprehension smoothly into ongoing dialogue.
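To make the idea concrete, here is a minimal sketch of how a reference-resolution query could be posed as plain text for a language model. It is an illustration only: the function names `build_prompt` and `parse_answer`, the prompt wording, and the numbered-list format are assumptions made for this example, not the format described in Apple's paper, and no model is actually called.

```python
import re


def build_prompt(user_request, candidates):
    """Format a reference-resolution query as text (hypothetical layout)."""
    lines = ["Entities on screen:"]
    # Number each candidate so the model can answer with short indices.
    for index, description in enumerate(candidates, start=1):
        lines.append(f"{index}. {description}")
    lines.append(f"User request: {user_request}")
    lines.append("Which entity numbers does the request refer to?")
    return "\n".join(lines)


def parse_answer(model_output, num_candidates):
    """Extract valid, de-duplicated entity numbers from a model's text reply."""
    picked = []
    for token in re.findall(r"\d+", model_output):
        number = int(token)
        # Ignore numbers that do not match any listed candidate.
        if 1 <= number <= num_candidates and number not in picked:
            picked.append(number)
    return picked


candidates = ["Joe's Pizza, phone 555-0142", "Main Street Pharmacy, phone 555-0199"]
prompt = build_prompt("Call the bottom one", candidates)
```

The prompt would be passed to whichever language model is in use, and `parse_answer` would turn its reply back into entity indices. Because both the input and the output are plain text, the task fits the ordinary text-in, text-out interface of a language model.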

How ReALM Works

ReALM’s mechanism begins with the digital reconstruction of a device’s screen layout in text form.

This method entails cataloguing visible entities and their spatial arrangement, then transcribing this visual data into a descriptive textual representation.

Such a strategy allows ReALM to “visualize” screen content, enhancing its ability to process user references to these elements accurately.
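The screen-to-text step described above can be sketched roughly as follows. This is a simplified illustration, not Apple's implementation: the `ScreenEntity` structure, the `line_margin` threshold, and the choice of tabs and newlines as separators are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class ScreenEntity:
    """A visible on-screen element (hypothetical structure for illustration)."""
    text: str
    x: float  # horizontal center of the element, in pixels
    y: float  # vertical center of the element, in pixels


def screen_to_text(entities, line_margin=10.0):
    """Render entities as text: rows top to bottom, items left to right."""
    # Sort by vertical position first so rows can be built in one pass.
    ordered = sorted(entities, key=lambda e: (e.y, e.x))
    rows = []
    for entity in ordered:
        # An entity whose center lies within line_margin of the row's first
        # entity is treated as being on the same visual line.
        if rows and abs(entity.y - rows[-1][0].y) <= line_margin:
            rows[-1].append(entity)
        else:
            rows.append([entity])
    # Within a row, order items left to right and separate them with tabs.
    return "\n".join(
        "\t".join(e.text for e in sorted(row, key=lambda e: e.x))
        for row in rows
    )


screen = [
    ScreenEntity("Call", 200, 102),
    ScreenEntity("Joe's Pizza", 40, 100),
    ScreenEntity("555-0142", 40, 140),
]
layout = screen_to_text(screen)
```

Here `layout` places "Joe's Pizza" and "Call" on the first line, separated by a tab, and the phone number on the second line, so the relative positions of the elements survive in a form a text-only model can read.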

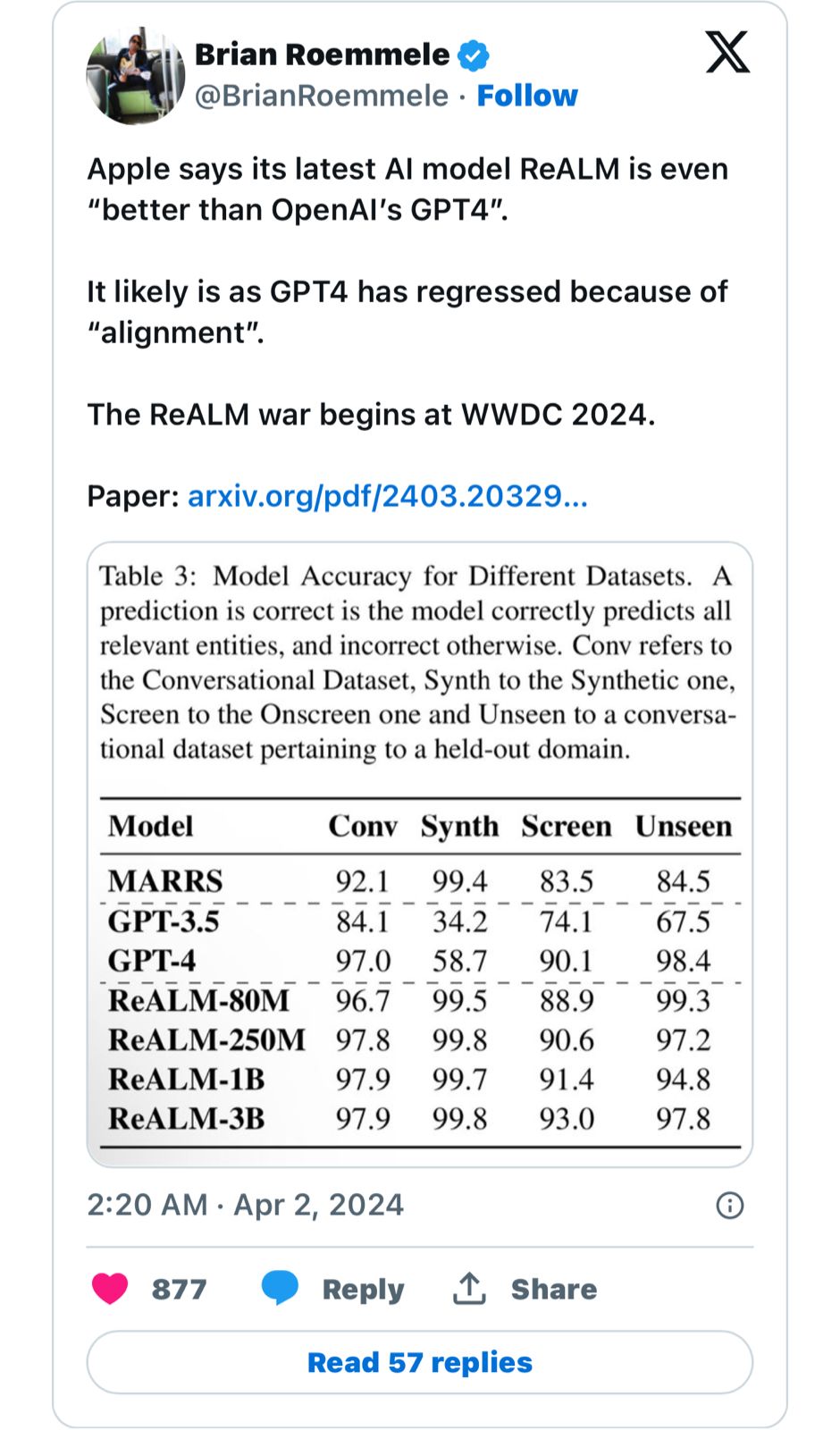

According to Apple’s research, combining this technique with specialized training in reference resolution enables ReALM to outperform existing systems, including OpenAI’s GPT-4, on these tasks.

The Implications of ReALM

The practical applications of ReALM are vast and varied. For everyday users, this could mean more straightforward interactions with voice-activated systems, allowing for commands that reference on-screen information without needing exact descriptions.

This advancement could be particularly beneficial in scenarios where hands-free operation is essential or where users may have difficulties with direct interaction, thereby broadening accessibility.

Looking Ahead

Apple’s introduction of ReALM is part of its ongoing exploration into integrating AI more deeply into its ecosystem.

Following the publication of multiple research papers on AI, including studies on integrating textual and visual data for language model training, Apple is poised to reveal further AI-driven innovations at WWDC this year.

FAQs

What sets Apple’s ReALM AI apart from existing language models?

Apple’s ReALM AI distinguishes itself by tackling the complex challenge of reference resolution in conversations.

Unlike traditional models, ReALM can interpret ambiguous references, such as pronouns or contextual cues, by converting on-screen content into a textual representation that the model can read.

This capability significantly improves how voice assistants understand and respond to commands, making interactions more natural.

How does ReALM improve interaction with voice assistants?

By converting the reference resolution process into a language modeling problem, ReALM enhances voice assistants’ ability to comprehend and act on commands referencing visual elements on a screen.

This breakthrough allows users to interact with their devices using natural language, reducing the need for precise, technical instructions.

In what ways could ReALM benefit users?

ReALM’s advanced understanding of references can simplify the use of voice-activated systems, especially in hands-free scenarios or for individuals who find direct interaction challenging.

This could include navigating car infotainment systems without distraction or aiding those with physical limitations by allowing more flexible command options.

What makes ReALM superior to other AI models like GPT-4?

ReALM’s approach to processing visual references and integrating them into conversational context sets it apart from models like GPT-4.

Apple’s research indicates that ReALM’s method of screen layout reconstruction and specialized training in reference resolution tasks leads to a more accurate understanding of user commands.
