Highlights

- Kapwing’s research shows that over 20% of YouTube recommendations for new users are AI-generated “slop”.

- AI slop channels could collectively earn around $117M annually, with India’s Bandar Apna Dost alone estimated at $4.25M.

- While YouTube is tightening policies and blocking channels that post fake AI-generated content, it is also integrating AI tools like Google’s Veo 3 into Shorts.

It appears YouTube’s efforts to curb the spread of low-quality, mass-produced AI content may not be delivering the desired results. A new study suggests that the platform’s recommendation system continues to push so-called ‘AI slop’ videos to new users, despite tighter content policies.

AI-Generated Slop Videos Dominate YouTube Recommendations

According to a report published by Kapwing, a video-editing company, more than one in five videos recommended to new YouTube users falls under the category of AI slop. The study analysed 15,000 of the world’s most popular YouTube channels to understand how widespread AI-generated low-quality content has become, as well as the scale of views and revenue such videos are generating.

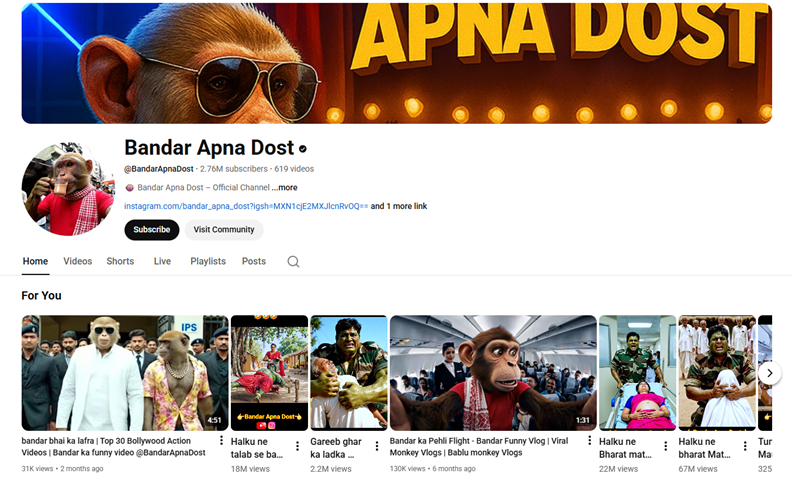

Kapwing’s research found that 278 out of the 15,000 channels studied uploaded exclusively AI slop content. Collectively, these channels have amassed around 63 billion views and gained approximately 221 million subscribers. The most-viewed AI slop channel on the platform is reportedly ‘Bandar Apna Dost’, which is based in India and has crossed 2.4 billion views. The channel’s AI-generated videos typically feature an anthropomorphic rhesus monkey alongside a Hulk-like muscular character battling demons.

Although YouTube’s current policies make AI slop videos ineligible for monetisation, Kapwing estimates that these channels could still be generating significant income. The report claims that AI slop channels on YouTube could collectively earn around $117 million per year, with ‘Bandar Apna Dost’ alone potentially bringing in about $4.25 million annually.

To better understand the user experience, Kapwing’s researchers created a new YouTube account and monitored its recommendations. They found that 104 of the first 500 videos suggested in the feed were AI slop, while nearly one-third of the remaining recommendations could be classified as ‘brain rot’ content.
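The percentages behind these findings follow from simple proportions of the counts Kapwing reported. The short Python sketch below reproduces that arithmetic using only figures quoted in this article; the variable and function names are illustrative and not taken from Kapwing’s report.

```python
# Figures quoted in the article (Kapwing report); no new data is introduced.
SLOP_VIDEOS = 104          # AI slop videos among the sampled recommendations
SAMPLED_VIDEOS = 500       # first videos recommended to the new account
SLOP_CHANNELS = 278        # channels that uploaded only AI slop
CHANNELS_STUDIED = 15_000  # popular channels analysed in the study


def share(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100 * part / whole


feed_share = share(SLOP_VIDEOS, SAMPLED_VIDEOS)
channel_share = share(SLOP_CHANNELS, CHANNELS_STUDIED)

print(f"AI slop share of the sampled feed: {feed_share:.1f}%")
print(f"Slop-only share of channels studied: {channel_share:.2f}%")
```

The first figure, 20.8%, is what the “over 20%” and “more than one in five” descriptions refer to. The second shows that slop-only channels make up under 2% of the channels studied, even though their videos account for a much larger share of this one account’s recommendations.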

The findings shed light on a rapidly expanding, semi-organised ecosystem that uses generative AI tools to farm engagement at scale. The report also points to a secondary layer of activity, largely driven by scammers, who sell paid courses and tips on how to create viral AI-generated videos.

What Does AI-Generated “Slop” Actually Mean?

As AI-generated content has surged over the past year, the term ‘slop’ has gained mainstream recognition. American dictionary publisher Merriam-Webster named ‘slop’ its word of the year for 2025, defining it as “digital content of low quality that is produced usually in quantity by means of artificial intelligence.”

Describing the trend, Merriam-Webster noted, “The flood of slop in 2025 included absurd videos, off-kilter advertising images, cheesy propaganda, fake news that looks pretty real, junky AI-written books, ‘workslop’ reports that waste coworkers’ time… and lots of talking cats. People found it annoying, and people ate it up.”

“It’s such an illustrative word. It’s part of a transformative technology, AI, and it’s something that people have found fascinating, annoying, and a little bit ridiculous,” Merriam-Webster president Greg Barlow was quoted as saying by The Associated Press.

While YouTube hosts a vast mix of authentic and inauthentic content, AI slop stands out as particularly concerning because it can be produced so easily and in such large volumes using widely available AI tools. Complaints about feeds being flooded with AI-generated content have grown louder across major social media platforms, including Instagram, X, and YouTube.

YouTube’s Response

In response to such criticism, platforms have attempted to limit the spread of low-quality AI content by tightening policies and enforcing takedowns. Earlier this month, YouTube reportedly blocked two large channels that were distributing fake, AI-generated movie trailers.

Responding to Kapwing’s report, YouTube said, “Generative AI is a tool, and like any tool it can be used to make both high- and low-quality content.” A company spokesperson added, “We remain focused on connecting our users with high-quality content, regardless of how it was made. All content uploaded to YouTube must comply with our community guidelines, and if we find that content violates a policy, we remove it,” as quoted by The Guardian.

FAQs

Q1. What percentage of YouTube recommendations for new users are AI-generated slop?

Answer. The study found that over 20% of recommended videos for new users fall under the category of AI slop.

Q2. How much revenue could AI slop channels potentially generate on YouTube?

Answer. Kapwing estimates these channels could collectively earn around $117 million annually, with Bandar Apna Dost alone possibly making $4.25 million per year.

Q3. How has YouTube responded to criticism about AI slop content?

Answer. YouTube has tightened policies, blocked channels distributing fake AI-generated content, and stated that all content must comply with its community guidelines. At the same time, it is integrating AI tools like Google’s Veo 3 into Shorts.