Highlights

- Apple unveils MGIE, a groundbreaking AI model for text-driven image editing.

- MGIE demonstrates superior editing performance and efficiency in recent benchmarks.

- Open-source access to MGIE encourages innovation within the AI community.

- Apple’s strategic AI startup acquisitions position it to compete for leadership in generative AI technology.

Apple, in collaboration with the University of California, Santa Barbara, has unveiled a pioneering open-source AI model designed to revolutionize how we approach image editing.

Named MLLM-Guided Image Editing (MGIE), where MLLM stands for multimodal large language model, the model lets users edit existing images directly through text prompts, much as tools like DALL-E or Midjourney generate new images from them.

Benchmarking Excellence in AI Image Editing

In a recently published conference paper, the researchers put MGIE to the test and report substantial improvements in image editing performance across various metrics.

According to these benchmarks, MGIE not only improves on existing methods but also keeps its inference times competitive.

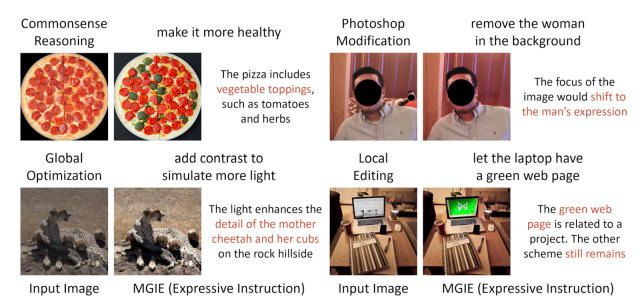

MGIE’s ability to perform Photoshop-style modifications, photo optimizations, and localized edits from simple prompts could change how we think about photo editing.

Open-Source Accessibility and Future Prospects

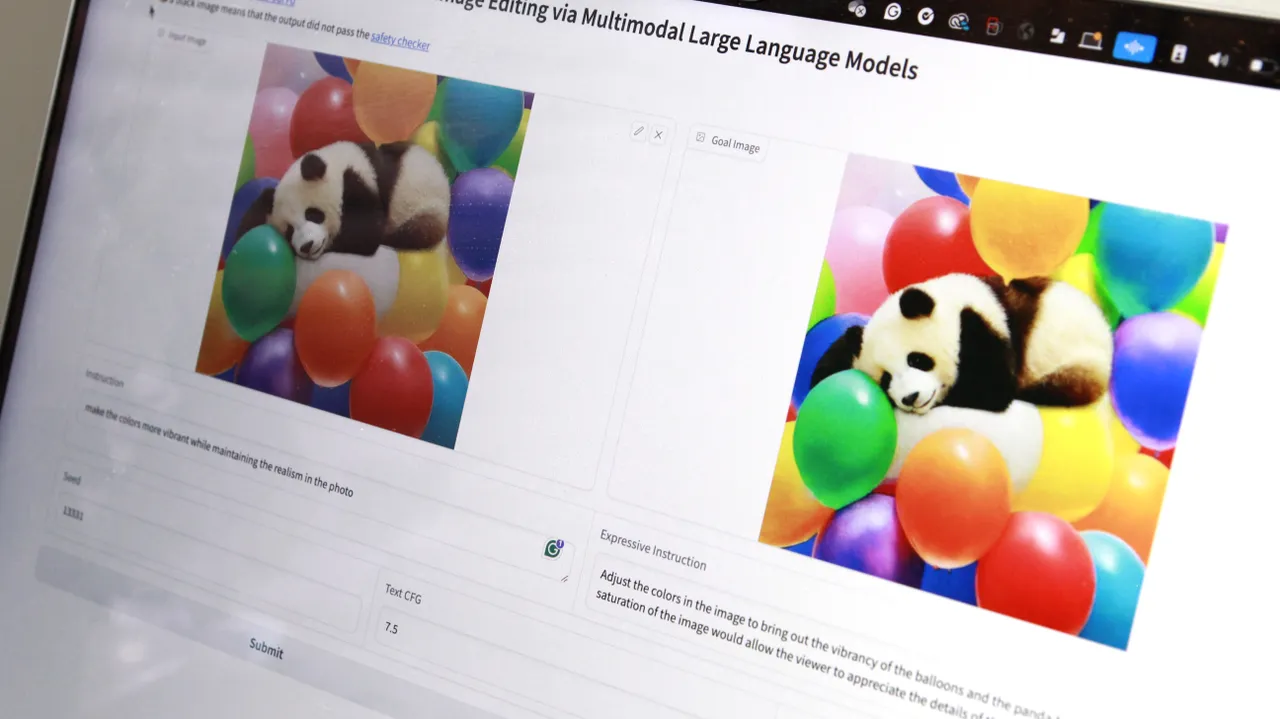

While MGIE is not yet broadly available as an official tool from Apple, the tech giant has made it accessible for technical exploration on GitHub, and a web demo is available on Hugging Face.

This move is part of Apple’s commitment to innovation and collaboration within the AI community.

Apple Playing the Long Game

The development of MGIE is part of Apple’s broader strategy to strengthen its position in the AI and machine learning arena.

Statista recently ranked the company first for AI startup acquisitions, with 32 in 2023 alone, ahead of tech counterparts such as Google, Meta, and Microsoft.

It is clear that Apple is aggressively expanding its AI capabilities with a long-term goal in mind.

These acquisitions, alongside advancements like MGIE, show Apple’s concerted effort to catch up with and potentially lead in the generative AI market, an area where its tech rivals like OpenAI have previously made more visible strides.

FAQs

What is the MGIE AI model?

MGIE, developed by Apple in collaboration with the University of California, Santa Barbara, is an open-source AI model that allows for image editing through text prompts, offering capabilities such as Photoshop-style modifications and photo optimizations.

How does MGIE compare to existing image editing methods?

MGIE has shown significant improvements in editing performance over existing methods while keeping its inference efficiency competitive, as detailed in a recent conference paper.

Where can I access MGIE for technical exploration?

Apple has made MGIE available for technical exploration on GitHub, with a web demo also available on Hugging Face, showcasing its commitment to fostering innovation in AI.

How does MGIE’s development fit into Apple’s broader AI strategy?

The introduction of MGIE aligns with Apple’s strategy to strengthen its standing in the AI and machine learning sector. That strategy is also reflected in Apple’s leading number of AI startup acquisitions in 2023, which are aimed at expanding its capabilities and potentially leading the generative AI market.

What makes MGIE’s approach to image editing unique?

MGIE’s unique approach lies in its ability to execute complex image editing tasks through simple text prompts, making sophisticated editing techniques more accessible and intuitive for users.

How is Apple’s AI model different from Google’s and Microsoft’s models?

The consumer-facing models and tools offered by Google and Microsoft only allow users to generate AI images from text inputs. On the editing side, Microsoft recently announced Designer for Copilot, which is powered by DALL-E 3.

This tool can help users edit AI-generated images. Users can highlight an object to make it pop, add background blur and change the art style.

The Microsoft image editing features are available in English for users in India, Australia, New Zealand, the US and UK.

How does Apple’s AI model work?

MGIE, which stands for MLLM-Guided Image Editing, can be applied to make a simple photo dramatic.

As per the research paper, “instruction-based image editing improves the controllability and flexibility of image manipulation via natural commands without elaborate descriptions or regional masks.”

The researchers noted that human instructions are sometimes too brief for current methods to capture and follow. Multimodal large language models (MLLMs), they said, show promising capabilities in cross-modal understanding and visual-aware response generation, which MGIE draws on to bridge that gap.

“MGIE learns to derive expressive instructions and provides explicit guidance. The editing model jointly captures this visual imagination and performs manipulation through end-to-end training,” the paper noted.

The researchers shared several examples. In one, they used a photo of a man being photobombed by a woman in the background.

A simple text prompt, “remove woman in the background,” erases her from the scene and makes the photo usable.

Similarly, an underexposed photo can be brightened and given more contrast with a simple prompt such as “add more contrast to simulate more light.”
